Red Hat Community / RH294 - Red Hat Linux Automation with Ansible / Forum


import_tasks vs include_tasks

Complex Ansible playbooks can become a tangled web of tasks. If you did not write a playbook yourself, it can be hard to follow its logic and to find the specific tasks you need to change. To make such playbooks more readable and maintainable, you can split the tasks into separate files.

One way to do this is to move a group of tasks into its own file and pull that file into your playbook with either import_tasks or include_tasks. The same tasks can then be reused instead of being written from scratch every time.

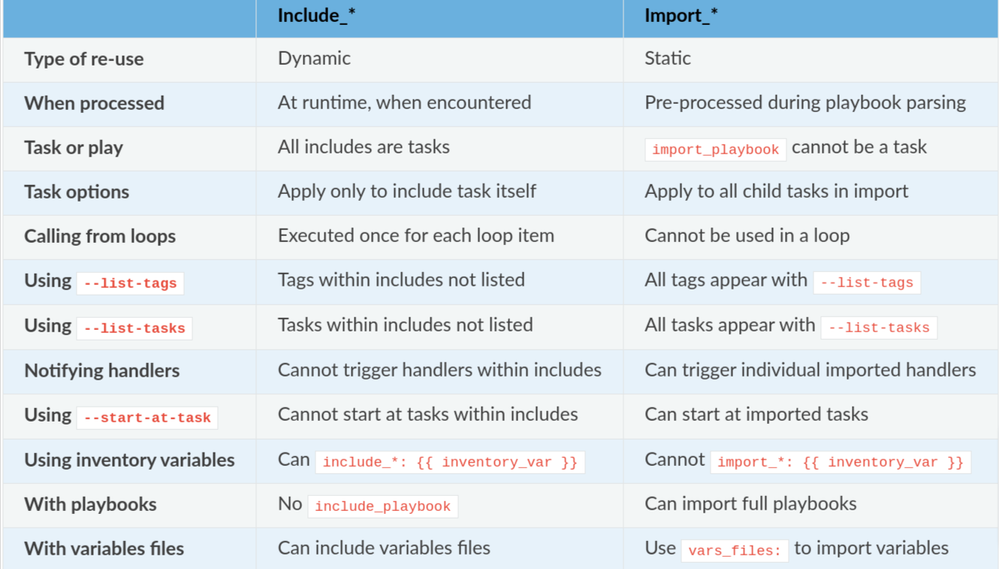

Imported tasks are pre-processed when the playbook is parsed, while included tasks are processed only when execution reaches the include. In other words, an import is resolved once, before any task runs, whereas an include is evaluated at runtime each time it is encountered (for example, once per loop iteration).
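A practical consequence of this, listed in the Ansible documentation referenced at the end of this post, is that an include can be looped while an import cannot: the include is a real task at runtime, so it is evaluated once per loop item. A minimal sketch, in which create_user.yaml, the user names, and the user_name variable are all hypothetical:

```yaml
# Hypothetical play: create_user.yaml is assumed to contain tasks that use {{ user_name }}.
- name: Run one task file once per loop item
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Include create_user.yaml for each user
      ansible.builtin.include_tasks: create_user.yaml
      loop:
        - alice
        - bob
      loop_control:
        loop_var: user_name  # named loop variable, so it cannot clash with loops inside the file

    # Putting the same loop on ansible.builtin.import_tasks fails with an error,
    # because the import is resolved at parse time, before any loop runs.
```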

Let's look at this with an example.

We have three YAML files:

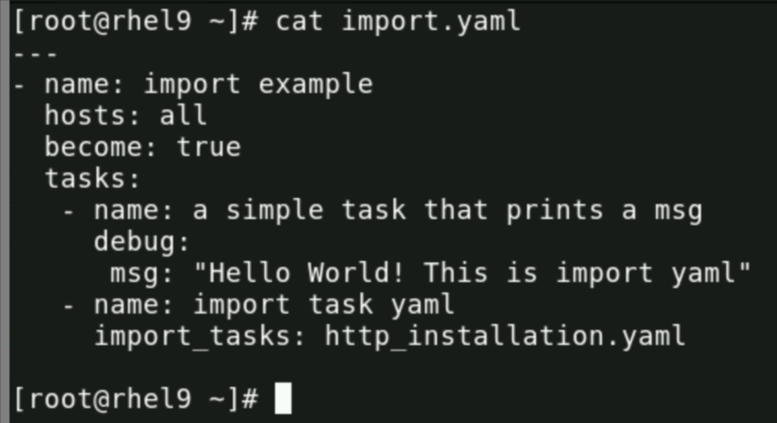
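Since the file contents are only shown as screenshots, here is a minimal text sketch of what three such files could look like. The host pattern, the become setting, the httpd package name, and the dnf module are assumptions and not necessarily what the screenshots contain; in practice these are three separate files, shown here as one YAML stream separated by ---.

```yaml
# http_installation.yaml (assumed content): a plain list of tasks, no play header
- name: Install the httpd package
  ansible.builtin.dnf:
    name: httpd
    state: present
---
# include.yaml: loads http_installation.yaml dynamically, when this task is reached
- name: Demonstrate include_tasks
  hosts: all
  become: true
  tasks:
    - name: Print Hello world
      ansible.builtin.debug:
        msg: Hello world

    - name: Include the httpd installation tasks
      ansible.builtin.include_tasks: http_installation.yaml
---
# import.yaml: inlines the same tasks statically, while the playbook is parsed
- name: Demonstrate import_tasks
  hosts: all
  become: true
  tasks:
    - name: Print Hello world
      ansible.builtin.debug:
        msg: Hello world

    - name: Import the httpd installation tasks
      ansible.builtin.import_tasks: http_installation.yaml
```

With fact gathering left on, running include.yaml would show four tasks (Gathering Facts, the debug task, the include, and the httpd task) and import.yaml only three, because the import never appears as a task of its own.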

1. import.yaml:

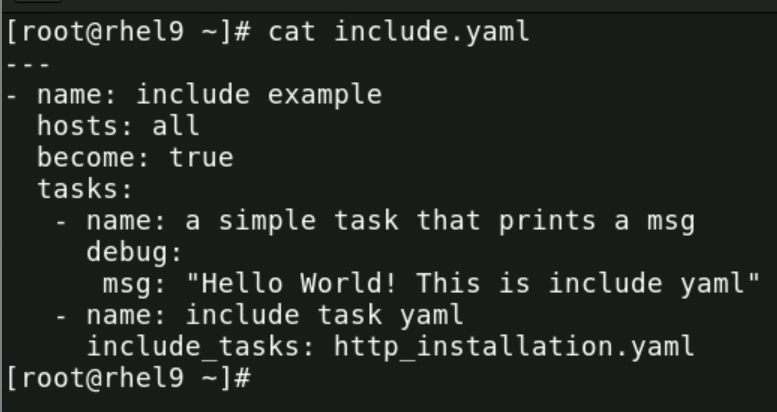

2. include.yaml:

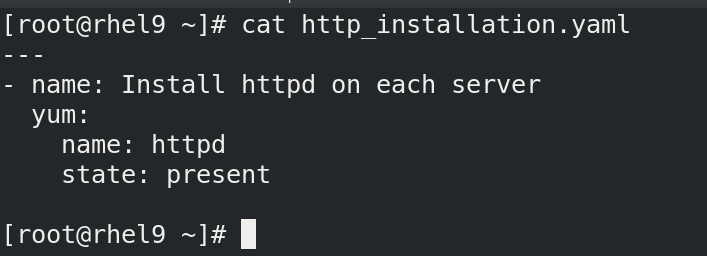

3. http_installation.yaml:

****************************************************************

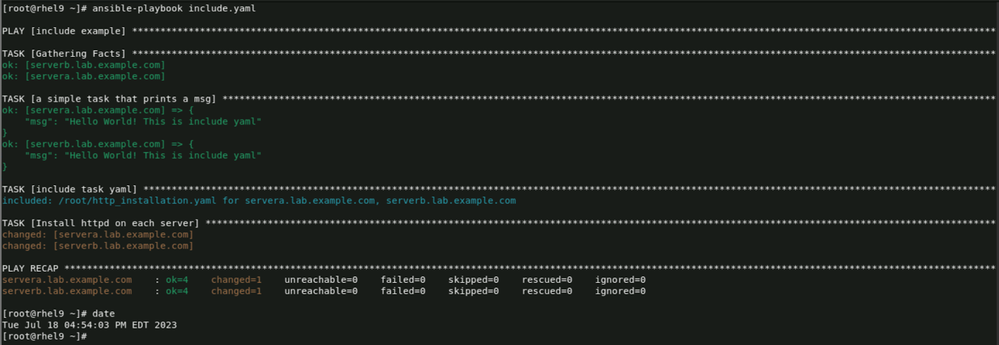

Now let's run the playbooks one at a time, starting with include.yaml.

Here one can see that a total of 4 tasks are executed:

- Gathering the facts

- Task printing Hello world

- The include_tasks task itself

- The task inside the included task file (http_installation.yaml)

Task 4 is the result of task 3: the include is itself a task, and the tasks in http_installation.yaml are loaded only when execution reaches it at runtime (they are NOT pre-processed).
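Because an include is resolved only when execution reaches it, its file name can depend on values that exist only at runtime, such as gathered facts. A minimal sketch, assuming hypothetical per-distribution task files such as http_installation_redhat.yaml and http_installation_debian.yaml:

```yaml
# Hypothetical play: assumes files such as http_installation_redhat.yaml and
# http_installation_debian.yaml exist next to the playbook.
- name: Pick a task file at runtime
  hosts: all
  become: true
  tasks:
    # Facts were gathered at the start of the play, so ansible_facts is
    # available by the time execution reaches this include.
    - name: Include the task file for this host's OS family
      ansible.builtin.include_tasks: "http_installation_{{ ansible_facts['os_family'] | lower }}.yaml"
```

An import_tasks with the same templated file name would have to be resolved while the playbook is parsed, before any facts exist, so it cannot choose a different file per host this way.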

*************************************************************************************************

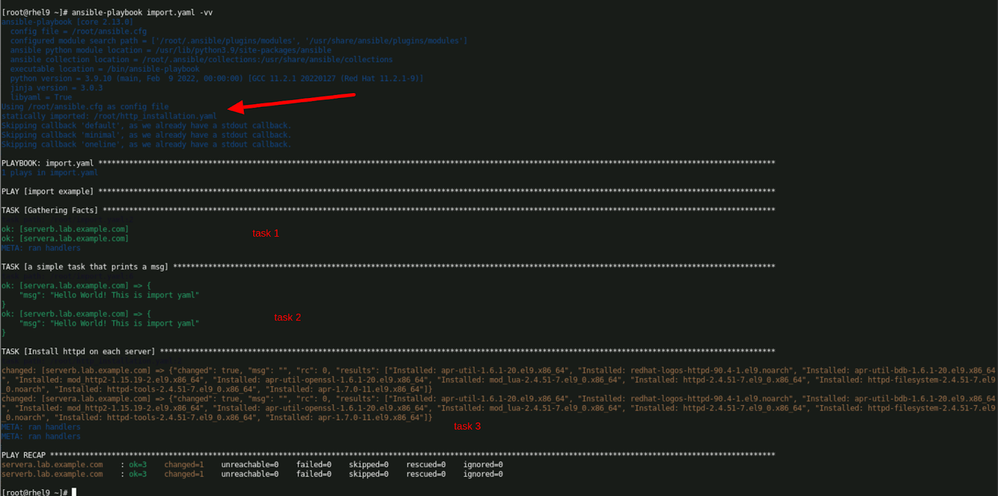

Now let's run import.yaml:

This time only 3 tasks are executed, not 4 as in the include.yaml case.

Notice the arrow: it points at the message "statically imported http_installation.yaml".

This means import_tasks pulls the tasks from http_installation.yaml into the playbook while the playbook is being parsed, before execution starts. The import itself is therefore not a task at runtime, which is why it does not show up in the task count.

Hence, we can conclude that IMPORT is a STATIC operation, while INCLUDE is a DYNAMIC operation.
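The static/dynamic split also changes how keywords such as when are applied, as the summary table in the Ansible documentation referenced in this post notes: on an import they are copied to every imported task, while on an include they apply only to the include task itself. A minimal sketch reusing http_installation.yaml, where install_web is a hypothetical variable:

```yaml
# Hypothetical play; install_web is an assumed variable, not from the original post.
- name: Compare when on import_tasks and include_tasks
  hosts: all
  become: true
  vars:
    install_web: true
  tasks:
    # Static: the condition is copied onto every task inside the file
    # and checked again for each of them.
    - name: Import the httpd installation tasks
      ansible.builtin.import_tasks: http_installation.yaml
      when: install_web | bool

    # Dynamic: the condition is checked once, on the include task itself;
    # if it is true, every task in the file runs without re-checking it.
    - name: Include the httpd installation tasks
      ansible.builtin.include_tasks: http_installation.yaml
      when: install_web | bool
```

The difference only matters when a task inside the file changes a value the condition depends on: with the import, the later tasks in the file see the new value and may be skipped.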

For the complete comparison, see the summary in the Ansible documentation: https://docs.ansible.com/ansible/devel/playbook_guide/playbooks_reuse.html#dynamic-vs-static


assume role issues thru codepipeline

Hey Trev, appreciate it,

******************************************

An exception occurred during task execution. To see the full traceback, use -vvv.

The error was: botocore.exceptions.ClientError: An error occurred (AccessDenied) when calling the DescribeStacks operation: User: arn:aws:sts::3081:assumed-role/AWSAssumeRoleforCommonPipeline/enc-common-pipeline-session is not authorized to perform: cloudformation:DescribeStacks on resource: arn:aws:cloudformation:ap-southeast-2:3081:stack/twe-enc-common-3081/7cab40a0-f15b-11ee-a816-0a91bc59e3ff because no identity-based policy allows the cloudformation:DescribeStacks action


Red Hat Learning Community

A collaborative learning environment, enabling open source skill development.