Networking Latency and Throughput

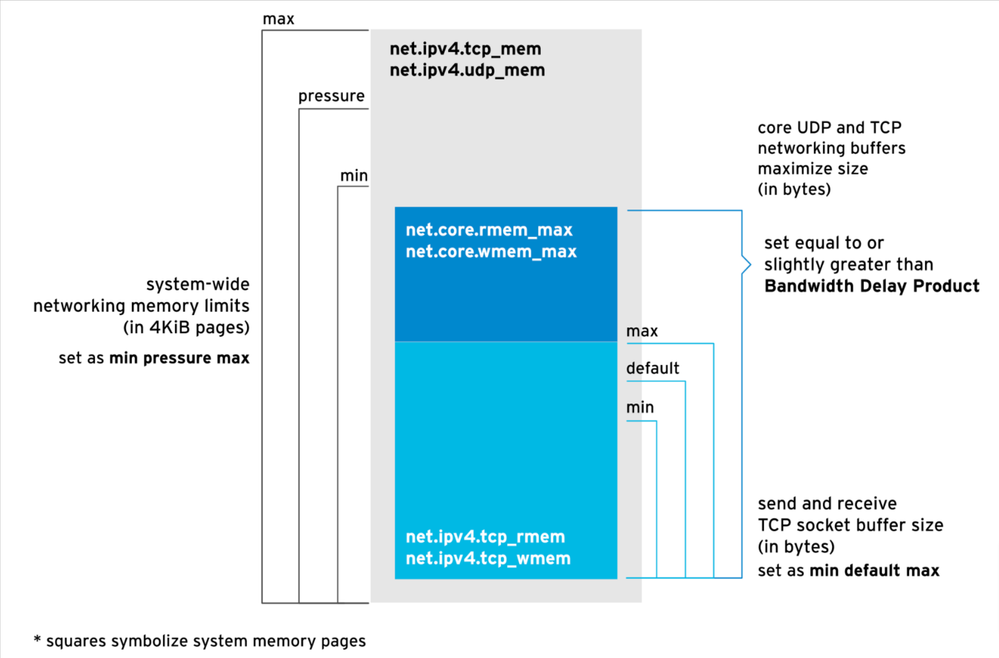

What is the difference between the core networking maximum receive buffer (net.core.rmem_max) and the maximum TCP receive buffer size (net.ipv4.tcp_rmem), and how and why does setting both of them to the BDP increase network performance?

Won't it affect memory utilisation and end up slowing the system down? I would love to hear anyone's input on this.

Not sure if this diagram is self-explanatory:

Hi,

If you use a high-speed, low-latency network (such as 10G, 40G, or 100G), this is worth checking.

I found the following document:

The settings net.core.rmem_max and net.ipv4.tcp_rmem in a Linux system are related to the configuration of network buffer sizes, but they serve different purposes:

net.core.rmem_max: This parameter sets the maximum receive buffer size (in bytes) that an application can request for a socket with setsockopt(SO_RCVBUF). It is a system-wide cap that applies to sockets of every protocol, not only TCP. The receive buffer temporarily holds data received from the network until the application reads it.

net.ipv4.tcp_rmem: This parameter, on the other hand, applies only to TCP sockets. It takes three values: the minimum, default, and maximum receive buffer size in bytes. The TCP stack uses them to tune the receive buffer of each connection automatically, based on network conditions. The maximum here bounds that automatic tuning; it does not override net.core.rmem_max, and a socket that sets SO_RCVBUF explicitly opts out of automatic tuning.
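To see how net.core.rmem_max acts on an explicit buffer request, here is a minimal Python sketch. The helper name `request_rcvbuf` is made up for illustration. On Linux, the value reported back is typically double the granted size (the kernel reserves room for bookkeeping), and a request above net.core.rmem_max is silently capped rather than rejected. Other operating systems may behave differently.

```python
import socket


def request_rcvbuf(nbytes: int) -> int:
    """Request a receive buffer of nbytes on a fresh TCP socket.

    Returns the size the kernel reports afterwards, which may differ
    from the requested size (hypothetical helper, for illustration).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, nbytes)
        return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


if __name__ == "__main__":
    wanted = 64 * 1024 * 1024  # 64 MiB, likely above the default rmem_max
    print(f"requested {wanted} bytes, kernel reports {request_rcvbuf(wanted)}")
```

If the reported number is far below what was requested, net.core.rmem_max is the limit being hit.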
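As a rough illustration of the three-value format, the following Python sketch parses a net.ipv4.tcp_rmem string and reads a sysctl through /proc/sys. The names `TcpRmem`, `parse_tcp_rmem`, and `read_sysctl` are hypothetical helpers, and the sample numbers in the comment are only an example of what a system might show, not a recommendation.

```python
from pathlib import Path
from typing import NamedTuple


class TcpRmem(NamedTuple):
    minimum: int   # smallest buffer a TCP socket is given
    default: int   # initial buffer size for a new TCP socket
    maximum: int   # upper bound for automatic tuning


def parse_tcp_rmem(text: str) -> TcpRmem:
    """Parse the whitespace-separated 'min default max' sysctl value."""
    fields = text.split()
    if len(fields) != 3:
        raise ValueError(f"expected 3 values, got {len(fields)}: {text!r}")
    return TcpRmem(*(int(field) for field in fields))


def read_sysctl(name: str, root: str = "/proc/sys") -> str:
    """Read a sysctl such as 'net.ipv4.tcp_rmem' from /proc/sys (Linux only)."""
    return (Path(root) / name.replace(".", "/")).read_text().strip()


# Example with a sample value (what a system reports will vary):
# parse_tcp_rmem("4096 131072 6291456").maximum
```

On a Linux host, `parse_tcp_rmem(read_sysctl("net.ipv4.tcp_rmem"))` gives the current limits, and `int(read_sysctl("net.core.rmem_max"))` gives the core cap to compare against.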

Setting both of these parameters to the Bandwidth-Delay Product (BDP) can enhance network performance, particularly on paths that combine high bandwidth with high latency. BDP is a measure of how much data can be "in flight" on the network at once. It is calculated as the product of the link's capacity (bandwidth) and its round-trip time (latency). Sizing the buffers to at least the BDP lets the TCP receive window grow large enough to keep the link full, so the sender can transmit more data before it has to wait for an acknowledgment. This can significantly improve throughput, especially on long-distance or high-capacity network connections.
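The BDP arithmetic can be sketched in a few lines of Python. The function name `bdp_bytes` and the example link (10 Gbit/s with a 50 ms round-trip time) are assumptions chosen for illustration; substitute measured values for a real path.

```python
def bdp_bytes(bandwidth_bits_per_sec: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes.

    bandwidth is in bits per second, round-trip time in milliseconds.
    Dividing by 8 converts bits to bytes, by 1000 converts ms to seconds.
    """
    return bandwidth_bits_per_sec * rtt_ms // (8 * 1000)


if __name__ == "__main__":
    # Assumed example: 10 Gbit/s link, 50 ms round-trip time.
    print(bdp_bytes(10_000_000_000, 50))  # 62500000 bytes, about 62.5 MB
```

The same 10 Gbit/s link with a 1 ms round-trip time needs only 1.25 MB, which is why large buffers matter mostly when latency is high.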

However, there are trade-offs:

Memory Consumption: Increasing buffer sizes will consume more memory. If many connections are open simultaneously, or if the system is memory-constrained, this could lead to issues. It's important to balance buffer sizes with the available system resources.
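To get a feel for the memory side, a back-of-the-envelope upper bound can be computed as connections multiplied by the maximum buffer size. This is a sketch with assumed numbers (1,000 connections and a 62.5 MB maximum), and `worst_case_rcvbuf_bytes` is a hypothetical helper. The real footprint is usually much lower, because a receive buffer only holds data the application has not read yet.

```python
def worst_case_rcvbuf_bytes(connections: int, max_buffer_bytes: int) -> int:
    """Upper bound on receive-buffer memory if every socket filled its buffer."""
    return connections * max_buffer_bytes


if __name__ == "__main__":
    # Assumed example: 1,000 connections, each allowed up to 62.5 MB.
    total = worst_case_rcvbuf_bytes(1_000, 62_500_000)
    print(f"{total / 1e9:.1f} GB worst case")  # 62.5 GB worst case
```

Comparing that bound with the memory available on the host shows quickly whether a BDP-sized maximum is safe for the expected number of connections.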

System Slowdown: If not configured correctly, large buffer sizes can lead to increased latency and even TCP bufferbloat, where excessive buffering in the network leads to high latency and jitter. This is counterproductive and can degrade the quality of real-time applications like VoIP or gaming.

In summary, setting net.core.rmem_max and net.ipv4.tcp_rmem to match the BDP can optimize network throughput under certain conditions, but it must be done carefully to avoid excessive memory usage and potential system slowdowns. It's often a matter of finding the right balance based on specific network and system characteristics.

For more information about it:

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/monitoring_and_managin...
