- RH294 - Red Hat Linux Automation with Ansible

import_tasks vs include_tasks

Complex Ansible playbooks can be like a tangled web of tasks. If you didn't write the playbook yourself, it can be hard to follow its logic and to find the specific tasks you need to modify. To make such playbooks more readable and maintainable, you can split the tasks into separate files.

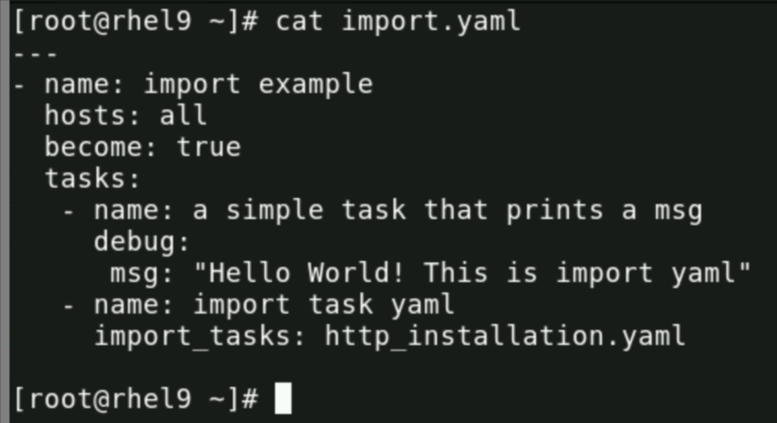

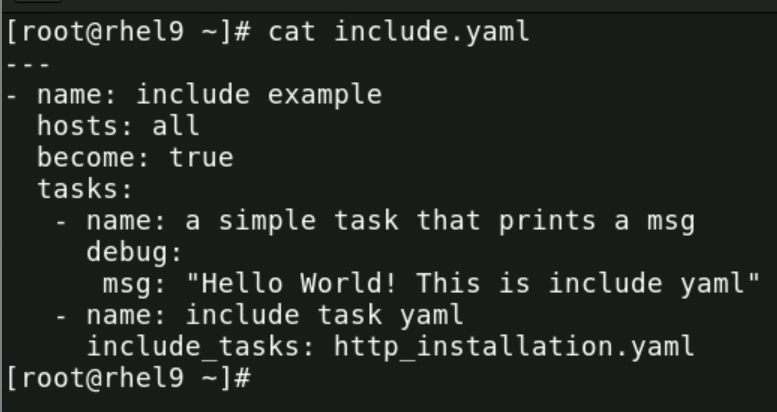

One way to do this is to reuse a task file: instead of writing everything from scratch, you pull it into your playbook with either import_tasks or include_tasks.

Imported tasks are pre-processed when the playbook is parsed, while included tasks are processed as they are encountered during execution. This means imported tasks are resolved once, before any task runs, while included tasks are evaluated each time execution reaches the include statement.

Let's understand this using the following example:

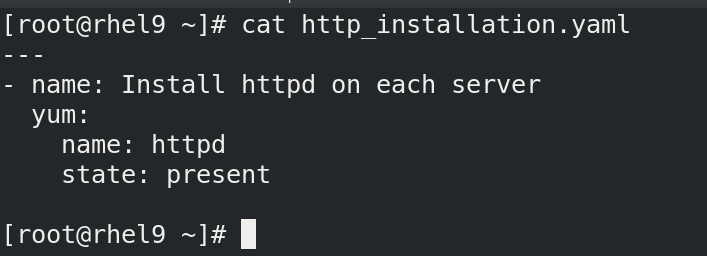

We have three YAML files:

1. `Import.yaml`

2. `Include.yaml`

3. `http_installation.yaml`
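The actual contents of these files are visible only in the screenshot. As a rough sketch, assuming the play targets `localhost` and that `http_installation.yaml` holds a single package-install task (the host, task names, and module choice are assumptions, not the original files), `Include.yaml` and the task file might look like the block below. The two files are shown as two YAML documents in one block; `Import.yaml` would be identical except that its last task uses `import_tasks` instead of `include_tasks`.

```yaml
# Include.yaml (hypothetical reconstruction, not the original file)
- name: Demonstrate include_tasks
  hosts: localhost            # assumption: the original target is not visible
  tasks:
    - name: Print Hello world
      debug:
        msg: Hello world

    # Dynamic: this line is processed only when execution reaches it.
    # In Import.yaml this would read: import_tasks: http_installation.yaml
    - name: Include the HTTP installation tasks
      include_tasks: http_installation.yaml
---
# http_installation.yaml (hypothetical): a bare list of tasks, no hosts:/tasks: header
- name: Install httpd
  package:
    name: httpd
    state: present
  become: true                # installing packages normally needs root
```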

****************************************************************

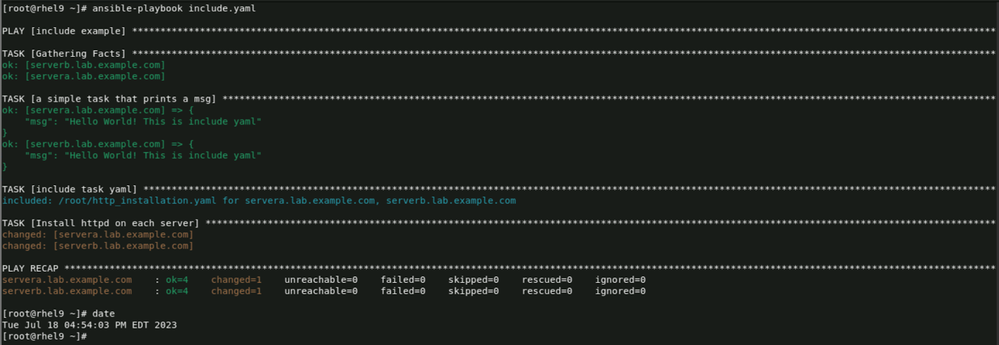

Now let's run the playbooks one at a time, starting with include.yaml.

Here one can see that a total of four tasks are executed:

- Gathering the facts

- The task printing Hello world

- The include_tasks task itself, which includes the file

- The task inside the included file

One can easily infer that task 4 is the result of the include in task 3, and that it was pulled in when execution reached it at run time (NOT pre-processed).
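Because an include is resolved only when execution reaches it, it can depend on values that exist only at run time, such as gathered facts. The sketch below assumes hypothetical task files named `setup_RedHat.yml`, `setup_Debian.yml`, and so on, one per OS family; those names are illustrative only.

```yaml
# Hypothetical play: the task file name depends on a fact gathered at run time
- name: Pick a task file based on the managed host's OS family
  hosts: all
  tasks:
    # Works with include_tasks: by the time this line runs, facts have been
    # gathered, so ansible_facts['os_family'] has a value (for example RedHat).
    # import_tasks would not work here, because its file name must be resolved
    # when the playbook is parsed, before any facts exist.
    - name: Include OS-specific tasks (hypothetical file names)
      include_tasks: "setup_{{ ansible_facts['os_family'] }}.yml"
```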

*************************************************************************************************

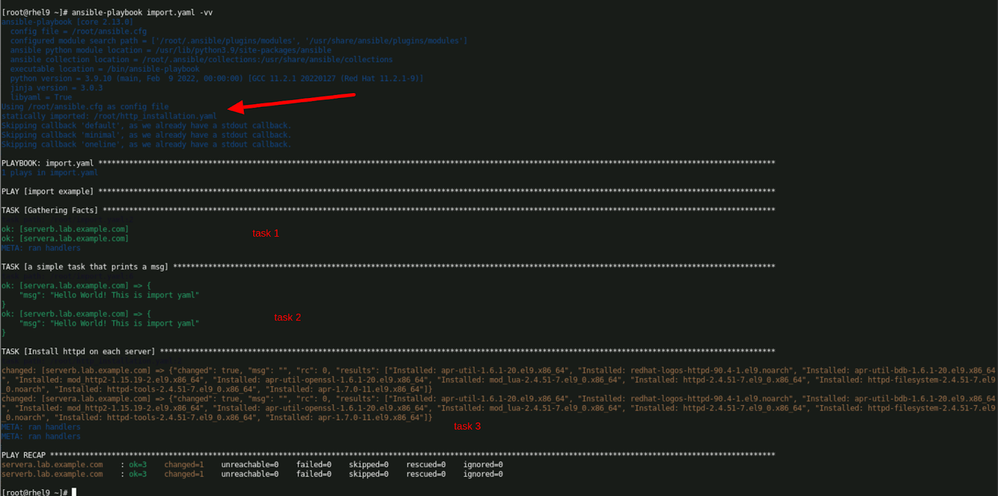

Now we will run import.yaml:

This time only three tasks are executed, not four as in the include.yaml case.

Notice the arrow: it points at the message "statically imported http_installation.yaml".

This means import_tasks pulled in the tasks from its YAML file at the very beginning, when the playbook was parsed, before execution started. The imported task takes the place of the import_tasks line, so the import itself never runs as a task of its own.

Hence, we can conclude that IMPORT is a STATIC operation, while INCLUDE is a DYNAMIC operation.
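One practical consequence of static vs dynamic shows up with loops and conditionals. The fragment below is a sketch for the `tasks:` list of a play; it reuses `http_installation.yaml` from the example, while the `install_http` variable and the loop items are hypothetical.

```yaml
# Dynamic include: a loop is allowed; the file is processed once per item,
# and the included tasks can read the current value as item.
- name: Include the installation tasks once per item
  include_tasks: http_installation.yaml
  loop:
    - first
    - second

# Static import: adding a loop here is rejected, because the import has
# already been expanded into plain tasks before anything runs.
# A when: condition is copied onto every imported task and checked per task
# (on include_tasks, it would be checked once, for the include itself).
- name: Import the installation tasks conditionally
  import_tasks: http_installation.yaml
  when: install_http | default(true) | bool   # install_http is hypothetical
```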

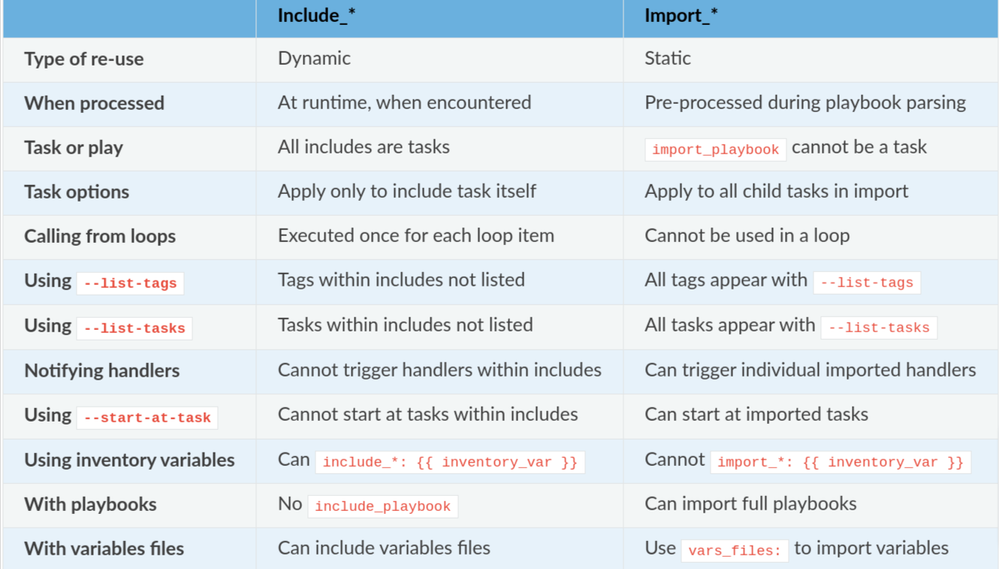

For a fuller comparison, refer to the summary at: https://docs.ansible.com/ansible/devel/playbook_guide/playbooks_reuse.html#dynamic-vs-static


Great example to demonstrate the difference, but it could do with some tidying up: it seems to be covered in HTML non-breaking spaces (&nbsp;), which makes it hard to read, and it is missing the output of the second play.


Yes, I broke it while editing from my mobile. It seems OK now.


I know the focus of this very nice write-up was on "static" vs "dynamic" operation, but I thought I would add a subtle point that could potentially cause heartburn: the location of the file(s) to be imported and/or included. Unless explicitly specified otherwise in the playbook, Ansible expects any files that are to be imported or included to be in the same directory as the playbook itself. If the file(s) to be included or imported reside in a different directory than the playbook, a pathname, absolute or relative, must be specified for the file(s).

To provide an example with this explanation, consider the following:

- a playbook named "play4" is in the directory /home/tlc/plays

/home/tlc/plays/play4

- a file named task1.yml to be included or imported is in the directory /home/tlc/plays

/home/tlc/plays/task1.yml

In this instance, it is sufficient to specify only the name of the file to be included or imported, which is what Chetan showed in his initial post. For example:

`- import_tasks: task1.yml`

or

`- include_tasks: task1.yml`
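A minimal sketch of this same-directory layout, assuming `play4` targets `localhost` and that `task1.yml` holds a single debug task (both contents are hypothetical; only the paths come from the example above). The two files are shown as two YAML documents in one block. Note that the imported or included file should contain only a list of tasks, not a complete play.

```yaml
# /home/tlc/plays/play4 (hypothetical contents)
- name: Play that pulls in task1.yml from its own directory
  hosts: localhost
  tasks:
    # A bare file name works because task1.yml sits next to play4.
    # include_tasks: task1.yml would find it the same way.
    - import_tasks: task1.yml
---
# /home/tlc/plays/task1.yml (hypothetical contents)
# Only a list of tasks: no hosts: line and no tasks: header.
- name: Say hello from task1.yml
  debug:
    msg: "task1.yml was found next to play4"
```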

Now consider the case where a directory named "files" is created in the same directory as the playbook, and the file to be included or imported lives in that new directory. See the pathname below:

/home/tlc/plays/files/task1.yml

In this instance, it is no longer sufficient to specify only the filename in the include_tasks and import_tasks statements. Doing so will result in an error: for import_tasks, as soon as the playbook is parsed; for include_tasks, when execution reaches the include. For the playbook to find "task1.yml" so that it can be imported or included, an absolute or relative pathname must be specified in the include_tasks and/or import_tasks statements. See the examples below:

`- include_tasks: /home/tlc/plays/files/task1.yml` (absolute pathname)

or

`- include_tasks: files/task1.yml` (relative pathname)

Same syntax when using the import_tasks statement:

`- import_tasks: /home/tlc/plays/files/task1.yml` (absolute pathname)

or

`- import_tasks: files/task1.yml` (relative pathname)
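As a sketch of how this looks inside a play (the play contents are hypothetical; only the paths come from the example above), one alternative to hard-coding `/home/tlc/plays` is Ansible's built-in `playbook_dir` variable, which holds the directory of the playbook being run. The templated form is shown only with `include_tasks` here.

```yaml
# Hypothetical /home/tlc/plays/play4 when task1.yml lives in files/
- name: Reference task1.yml from a subdirectory
  hosts: localhost
  tasks:
    # Relative path, resolved against the directory that contains play4
    - import_tasks: files/task1.yml

    # Equivalent absolute path built from the playbook_dir magic variable,
    # so the playbook can be moved without editing /home/tlc/plays by hand
    - include_tasks: "{{ playbook_dir }}/files/task1.yml"
```

Both tasks pull in the same file, so `task1.yml` runs twice here; in a real playbook you would keep only one form.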

Okay, I'd better stop here. Much more, and my comment about this being a subtle point will seem misplaced. After all, this was only intended to be chocolate syrup on top of the ice cream.


Many thanks for the addition, @Trevor!


Hey Trevor, what's happening here, then?


So, looking at this, you have the following...

This runs when it gets to it in the tasks.

https://rhtapps.redhat.com/verify?certId=111-134-086

SENIOR TECHNICAL INSTRUCTOR / CERTIFIED INSTRUCTOR AND EXAMINER

Red Hat Certification + Training


Appreciate your help on this, Travis.


Again, there are a few things here...

You've got a variable named assume_role_name whose value is AWSAssumeRoleforCommonPipeline. Nothing here uses it, except perhaps the task file _assume_role.yml, which should be in the same location as your playbook.

I suspect that _assume_role.yml uses the variables mentioned here and attempts to use a role named AWSAssumeRoleforCommonPipeline. If so, you also need to make sure a role named AWSAssumeRoleforCommonPipeline is installed; otherwise it won't run, because Ansible can't find the role.

There might be some confusion here between roles and tasks, and between include_role and include_tasks. When you run a role, you don't get just tasks/main.yml; you get all the parts of the role that would run. It is possible to include only tasks if that is the end goal, but it is very unclear (at least to me) what you're trying to accomplish.

The first playbook (ignoring the formatting) executed a ROLE called roles/common.lambda_layers, then moved on to the tasks section and executed the tasks in order, so it would have run:

`- include_tasks: _assume_role.yml`

Then, after that ran, it would have executed the next ROLE.
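To illustrate the role vs task-file distinction described above, here is a sketch with placeholder names (`my_role` and `my_other_role` are hypothetical, and this is not the poster's playbook; only `_assume_role.yml` comes from the thread). Roles listed under `roles:` run before anything under `tasks:`, `include_tasks` pulls in just a list of tasks, and `include_role` brings in a whole role.

```yaml
# Hypothetical play: role names are placeholders
- name: Roles versus task files
  hosts: localhost
  roles:
    - my_role                 # runs before anything listed under tasks:
  tasks:
    # Pulls in only the list of tasks in _assume_role.yml
    - name: Run a plain task file
      include_tasks: _assume_role.yml

    # Runs a whole role: its tasks/main.yml plus its defaults, vars,
    # handlers, and so on; the role must be installed where Ansible can find it
    - name: Run another role dynamically
      include_role:
        name: my_other_role
```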


Okay, I'm off my sabbatical, and it's time for me to get back out here.

keg, help me unpack your query. What specifically are you asking about?

Red Hat

Learning Community

A collaborative learning environment, enabling open source skill development.